What is Information Theory?

Information theory is the study of the transmission and communication of data. It focuses on reliability and efficiency. Information theory and coding theory are closely related topics, and they will both be discussed.

Both general information theory and coding theory can be split into two categories: compression and error-correction. Compression, which might also be referred to as source coding, represents the efficiency part of information theory. While error-correcting, which might also be referred to as channel coding, represents the reliability part of information theory,

What is Gray Code & Motivation?

Gray code is also known as reflect Binary code. Frank Gray made gray code to combat the potential syncronization issues with bit switching. In standard binary, “011” is 3, and “100” is 4. In order to get from 3 to 4, a switch must occur at every position. Getting all the bits to flip in sync is difficult as the number grows.

In gray code, the number of bit switches that need to occur between neighboring numbers is only 1.

Converting Gray Code to Binary Code:

Converting gray code to binary code is easy. The conversion is only comprised of a few instructions:

Put down the most signifgant digit (the first digit in a binary sequence it is 1 in 100 and 0 in 011)

For every continuous pair, compare those two bits.

If the two bits are the same, then put a 0.

If the two bits are different, then put down a 1.

Converting Binary Code to Gray Code:

Converting binary code back to gray code is almost the same as converting it the other way.

Put down the most significant digit from the gray code.

Now compare the next digit in the gray code to the current (gray code index 1) in the binary code.

If the two bits are the same, then put a 0.

If the two bits are different, then put down 1.

What is the Prufer Code?

Prufer code is a way to condense a labeled graph into a unique sequence.

Prufer Code:

In order to generate the preferred code for a graph, follow the following instructions:

Create an empty array.

Get the degree (the number of connections) of all nodes.

Find the leaf node (1 connection) of the lowest label.

Then, add the node on the other side of its one connection to the Prufer code and remove the leaf. When removing the leaf node, make sure to update the degree of the node on the other side of the connection.

Repeat Steps 3-5.

In order to generate the graph from the Prufer code, follow the following instructions:

There will always be two nodes missing from the prufer sequence, so create len (prufer sequence) + 2 new labeled nodes.

Find the smallest node that isn’t in the prufer sequence and connect it to the first node in the prufer sequence (which isn’t deleted). Remove the value from the Prufer sequence that was just used.

Repeat step 2.

What are Hamming Codes?

Hamming codes were the very first method of self-correcting messages and error detection. When information is transferred across a noisy channel, some of the bits may have been flipped. Hamming codes are able to detect one error and fix it. It is also able to recognize if there are >1 errors, but it will not be able to fix them.

Hamming codes aren’t common anymore; there has been a lot of development since they were discovered. Items like the CD use the reed-soloman method to correct errors that may have been produced by scratches on them.

How Hamming Codes Work:

Hamming codes rely solely on multiple parity checks. All bits that are a power of 2 (when they’re 0 indexed) will be reserved for parity checks. The 0th index is also reserved for an extension to the hamming codes. So, in a 16-bit block, 5 of them are used for checking and 11 are used to send a message. In a 256-bit block, only nine of the bits are set aside for error correction.

Each of these parity bits is assigned a region of the bits. Parity is the state of something. What value each of the parity bits is set to is determined by the two following rules:

If there are an odd number of ones in its region, then it will be set to 1.

If there are an even number of ones in its region, then it will be set to 0.

For example, if one of the parity bits is set to 1 (the odd number of ones), But, in the region that that bit is responsible for, there are an even number of ones for which the receiver is aware that there must be at least one error.

If there is only one error present with a couple of yes or no questions based on the parity bits, the error can always be located. Observe the following two questions:

Based on whether each parity bit actually represents the value it says it does, it is able to pin down which column the error must have taken place in. If the pairty bit is wrong over that region, then it must exist in that region (2 columns are in questions). Based on the second question, it will always take one of the two questioned columns to prove that one has to be the error column or disprove that one of the columns is the error column.

The same thing happens for the horizontal parity bit questions. After processing the question, it is able to determine which row the error occurred in. With both the information for the column and the row, the exact location can be determined.

However, there is still a major issue with the algorithm. Prior to the 0th bit, I was doing much. But it actually plays a big part in determining whether there were multiple errors present. In this case, the 0th bit serves as a parity bit for the entire block. Its value is determined by the same rule as the other parity bits.

In the case of one error, the parity of the entire block would be wrong, but that doesn’t matter as the other parity bits would have already corrected the error. In the case of two errors, the normal parity bits won’t be able to find the error locations. The parity of the block will be correct with two errors. Now, with the information that the parity is correct and there had to have been some sort of error, it is confirmed that there was more than one error.

Source – Channel Separation:

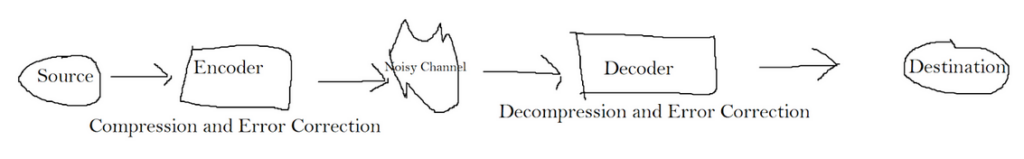

The transfer of data can be modeled like the following.

We start with a source, which sends its information to the encoder. The encoder will prepare it and then send it over a channel. This channel is potentially noisy. A noisy channel might mean that some of the encoded data might be changed or lost. After leaving the channel, the decoder will try its best to decode the information. Once decoded to the best of its ability, it will send it on to the destination.

This scenario deals with compression and error correction. The encoder will compress the information and check for errors. Dealing with the efficiency and reliability of data transfer. The decoder will do the opposite. It will decompress the information and check for errors again.

A minute detail about the diagram is the direction of the arrows. The above diagram describes a system that has forward error correction (FEC). In forward error correction, the decoder will try its best to find and fix errors. But it will never contact the source and ask it to resend any of the information. These systems are common for services that run in real-time. It would be quite annoying to wait for a YouTube video to ask for lost information almost every second. Sometimes it’s a worthwhile trade to decrease reliability for efficiency.

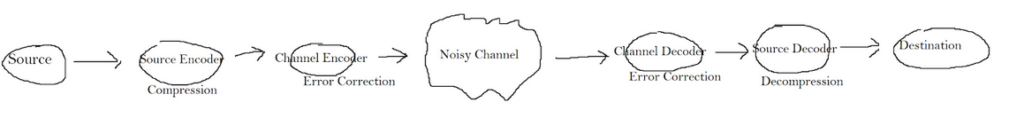

Claude Shannon, one of the primary founders of information theory, made a claim about this system. He said the system would be equivalent if we broke the encoder and decoder into two separate parts. If we broke the encoder into a source encoder and a channel encoder, Along with separating the decoder into a source decoder and a channel decoder, we would be left with the same problem. This claim would be proven and named the “Source-Channel Separation Theorem.” Refer to the following diagram:

The source encoder does compression, and the channel encoder does error correction coding. The channel decoder does the inverse of error correction coding, and the source decoder decompresses the information.

The theorem, more informally, states that the channel encoder doesn’t rely on the source encoder. Both can be designed independently, and the system would work the same as if they were developed together.

You don’t necessarily need to make a full encoder and decoder. Instead, you could create a channel source encoder/decoder and a channel encoder/decoder.

When encoding, the channel encoder doesn’t need to know anything about the inner workings of the source encoder. This works exactly the same for the decoding portion.